Introduction:

TensorFlow is an open-source framework that has become one of the most widely used tools in machine learning and deep learning.

Developed by Google, TensorFlow provides a comprehensive framework for building, training, and deploying machine learning models, particularly deep neural networks. In this article, we cover the key concepts of deep learning with TensorFlow and some of its practical applications.

What is TensorFlow?

TensorFlow is a machine learning library that was released as open source by the Google Brain team in 2015. It is designed to support the development of machine learning and deep learning models by providing a flexible and efficient framework. TensorFlow is particularly well known for its support for building and training neural networks, which makes it a popular choice for researchers and engineers in the field of artificial intelligence.

TensorFlow's name is derived from the concept of tensors, which are multi-dimensional arrays. These tensors are fundamental building blocks for data representation in TensorFlow, enabling the library to handle a wide range of data types and structures. TensorFlow's versatility, from numerical computations to natural language processing, makes it a go-to tool for various machine learning tasks.
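To make the idea of tensors concrete, here is a minimal sketch that creates tensors of different ranks with `tf.constant` and `tf.zeros`. The variable names and the image dimensions are illustrative assumptions, not anything prescribed by TensorFlow.

```python
import tensorflow as tf

# Tensors of increasing rank (number of dimensions).
scalar = tf.constant(3.0)                       # rank 0, shape ()
vector = tf.constant([1.0, 2.0, 3.0])           # rank 1, shape (3,)
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])  # rank 2, shape (2, 2)

# Assumed example: a batch of 4 RGB images of 28x28 pixels is a rank-4 tensor.
images = tf.zeros([4, 28, 28, 3])

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("images", images)]:
    print(name, t.shape, t.dtype)
```

Every tensor carries a shape and a data type, which is how the same library can represent a single number, a table of features, or a batch of images.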

Deep Learning and TensorFlow:

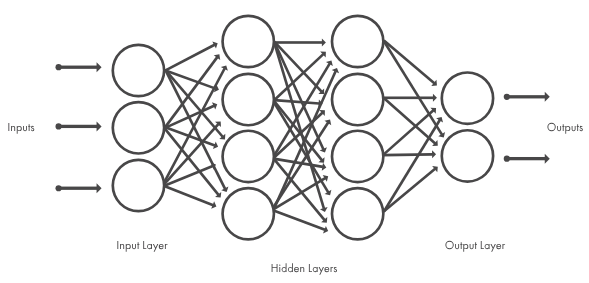

Deep learning is a subset of machine learning that focuses on neural networks with multiple layers, known as deep neural networks. TensorFlow is well suited to deep learning because its high-level Keras API (`tf.keras`) lets you assemble complex neural network architectures from reusable layers.

Deep learning models built with TensorFlow have found applications in diverse fields, from computer vision to natural language processing. For instance, convolutional neural networks (CNNs) implemented in TensorFlow have achieved remarkable success in image classification tasks, while recurrent neural networks (RNNs) have excelled in sequence-based tasks like language modeling and speech recognition.

Data Preparation in TensorFlow:

Data preparation involves collecting, cleaning, and structuring the data for model training and evaluation. TensorFlow offers tools to streamline this process, such as the `tf.data` API for building input pipelines.

One of the essential steps in data preparation is splitting the dataset into training and testing subsets. The training set is used to teach the model, while the testing set is reserved for evaluating the model's performance on unseen data.
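The split described above could look roughly like the following. This is a sketch on a made-up toy dataset; the 80/20 ratio, the batch size, and all variable names are assumptions chosen for illustration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy dataset: 100 samples, 4 features, binary labels.
rng = np.random.default_rng(seed=0)
features = rng.normal(size=(100, 4)).astype("float32")
labels = rng.integers(0, 2, size=100)

# Shuffle the sample indices once, then hold out the last 20% for testing.
indices = rng.permutation(len(features))
split = int(0.8 * len(features))
train_idx, test_idx = indices[:split], indices[split:]

x_train, y_train = features[train_idx], labels[train_idx]
x_test, y_test = features[test_idx], labels[test_idx]

# Wrap each subset in a tf.data pipeline; only the training data is shuffled.
train_ds = (
    tf.data.Dataset.from_tensor_slices((x_train, y_train))
    .shuffle(buffer_size=len(x_train))
    .batch(16)
)
test_ds = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(16)

print(len(x_train), "training samples,", len(x_test), "test samples")
```

Splitting the arrays before building the pipelines keeps the two subsets disjoint, so no test sample can leak into training.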

Training Models in TensorFlow:

Training deep learning models in TensorFlow involves specifying the architecture of the neural network, defining a suitable loss function, and selecting an optimization algorithm. The model learns to minimize the loss by adjusting its internal parameters during training.
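As a rough illustration of these three choices (architecture, loss, optimizer), the sketch below trains a tiny Keras classifier on synthetic data. The dataset, layer sizes, learning rate, and epoch count are all assumptions made for the example.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy problem: the label is 1 when the features sum to a positive value.
rng = np.random.default_rng(seed=0)
x = rng.normal(size=(200, 4)).astype("float32")
y = (x.sum(axis=1) > 0).astype("float32")

# 1. Architecture: one hidden layer and a sigmoid output for binary classification.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# 2. Loss function and 3. optimization algorithm.
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

# Training adjusts the weights to reduce the loss on the training data.
history = model.fit(x, y, epochs=5, batch_size=32, verbose=0)
print(history.history["loss"])
```

The `history` object returned by `fit` records the loss and metrics per epoch, which is a quick way to check whether training is making progress.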

For instance, we can build a simple model by stacking layers with the Keras Sequential API, or design more complex custom models, such as those with multiple inputs or branches, using the Functional API.
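The difference between the two styles is easier to see side by side. The following is a minimal sketch; the input size, layer widths, and the two-branch design are arbitrary assumptions.

```python
import tensorflow as tf

# Sequential API: a plain stack of layers, each feeding the next.
sequential_model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])

# Functional API: layers are called on tensors, which allows branches,
# multiple inputs or outputs, and shared layers.
inputs = tf.keras.Input(shape=(10,))
branch_a = tf.keras.layers.Dense(16, activation="relu")(inputs)
branch_b = tf.keras.layers.Dense(16, activation="tanh")(inputs)
merged = tf.keras.layers.Concatenate()([branch_a, branch_b])
outputs = tf.keras.layers.Dense(1)(merged)
functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)

functional_model.summary()
```

The Sequential version is shorter, but it cannot express the two parallel branches that the Functional version merges with `Concatenate`.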

During training, TensorFlow keeps track of the model's performance, and you can monitor progress using TensorBoard, a visualization tool that helps you analyze metrics like loss and accuracy over time.
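One common way to feed TensorBoard is the `tf.keras.callbacks.TensorBoard` callback, sketched below. The temporary log directory, the random data, and the one-layer model are placeholders assumed for the example.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical log location; any writable directory works.
log_dir = tempfile.mkdtemp()

# Random placeholder data for a small regression problem.
x = np.random.rand(64, 4).astype("float32")
y = np.random.rand(64, 1).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The callback writes loss and metric summaries to log_dir during training.
tensorboard_cb = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
model.fit(x, y, epochs=3, verbose=0, callbacks=[tensorboard_cb])

print("Logs written to", log_dir, os.listdir(log_dir))
```

After training, running `tensorboard --logdir` with that directory on the command line starts the dashboard so the logged curves can be inspected in a browser.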

Layer Formation and Model Architecture:

Designing a model's architecture involves determining the type and number of layers, choosing appropriate activation functions, and deciding whether dropout or batch normalization layers are needed.

Activation functions, such as ReLU (Rectified Linear Unit) and Sigmoid, introduce non-linearity to the model, allowing it to learn complex relationships within the data. The arrangement of layers, commonly referred to as the model's architecture, can vary significantly based on the problem you are solving.
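To see what these two activation functions do to their inputs, here is a small sketch that applies `tf.nn.relu` and `tf.math.sigmoid` to a handful of sample values. The input values are chosen arbitrarily.

```python
import tensorflow as tf

x = tf.constant([-2.0, -0.5, 0.0, 0.5, 2.0])

# ReLU computes max(0, x): negative inputs become 0, positive inputs pass through.
relu_out = tf.nn.relu(x)

# Sigmoid computes 1 / (1 + exp(-x)): every input is squashed into the range (0, 1).
sigmoid_out = tf.math.sigmoid(x)

print(relu_out.numpy())
print(sigmoid_out.numpy())
```

Neither function is a straight line, and that is what lets stacked layers represent relationships that a purely linear model cannot.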

The choice of architecture is often influenced by the characteristics of the training data. For example, a convolutional neural network (CNN) is ideal for image data, while a recurrent neural network (RNN) may be used for sequential data like text or time series.

Common Layer Names:

- Dense Layer

- Convolutional Layer

- Pooling Layer

- Dropout Layer

- Recurrent Layer

- Batch Normalization Layer

- Softmax Layer

- Flatten Layer

- ReLU Layer

- Activation Layer

Each layer has its own function, and which layers to use depends on the training data and the problem being solved.
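As an illustration of how several of the layers listed above fit together, the sketch below assembles a small image classifier with `tf.keras.layers`. The input shape (28x28 grayscale images), the 10 output classes, and all layer sizes are assumptions; a recurrent layer is left out because it targets sequence data rather than images.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),             # assumed: 28x28 grayscale images
    tf.keras.layers.Conv2D(16, kernel_size=3),     # convolutional layer
    tf.keras.layers.BatchNormalization(),          # batch normalization layer
    tf.keras.layers.Activation("relu"),            # activation layer applying ReLU
    tf.keras.layers.MaxPooling2D(pool_size=2),     # pooling layer
    tf.keras.layers.Flatten(),                     # flatten layer
    tf.keras.layers.Dense(32, activation="relu"),  # dense layer
    tf.keras.layers.Dropout(0.5),                  # dropout layer
    tf.keras.layers.Dense(10),                     # dense layer: one score per class
    tf.keras.layers.Softmax(),                     # softmax layer: scores to probabilities
])

model.summary()
```

For text or time series, a recurrent layer such as `tf.keras.layers.LSTM` would typically take the place of the convolution and pooling layers.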

Using Trained Models:

Once you have trained a deep learning model in TensorFlow, you can use it for making predictions on new or test data. TensorFlow provides methods to save and load model weights and configurations, ensuring that your trained model can be easily deployed and reused.
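Saving and reloading could look like the sketch below, which uses `model.save` and `tf.keras.models.load_model`. It assumes a reasonably recent TensorFlow release in which the `.keras` file extension is supported; the file name and the tiny untrained model are placeholders.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Placeholder model standing in for one you have already trained.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Hypothetical file name; the file stores both architecture and weights.
path = os.path.join(tempfile.mkdtemp(), "my_model.keras")
model.save(path)

# Reload the model and check that it behaves like the original.
restored = tf.keras.models.load_model(path)

sample = np.ones((2, 4), dtype="float32")
same = np.allclose(model.predict(sample, verbose=0),
                   restored.predict(sample, verbose=0))
print("Predictions match after reload:", same)
```

Comparing predictions before and after the round trip is a cheap sanity check that the saved file really captured the model.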

Using a trained model for inference is typically a straightforward process. You input new data into the model, and it generates predictions based on the patterns it has learned during training. This predictive capability has a wide range of applications, from image recognition and natural language understanding to recommendation systems and autonomous vehicles.
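In Keras, inference usually comes down to a call to `model.predict`. The sketch below uses an untrained stand-in model and random inputs, so the predicted classes are arbitrary; only the shapes and the workflow are meaningful.

```python
import numpy as np
import tensorflow as tf

# Stand-in for a trained classifier with 4 input features and 3 classes (assumed).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Five new samples with four features each.
new_data = np.random.rand(5, 4).astype("float32")

# One row of class probabilities per sample, shape (5, 3).
probabilities = model.predict(new_data, verbose=0)

# The most likely class for each sample.
predicted_classes = probabilities.argmax(axis=1)

print(probabilities.shape)
print(predicted_classes)
```

With a real trained model, `new_data` must be preprocessed in the same way as the training data so that the inputs match what the model learned from.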